Agentic AI: A new way of doing work

February 09, 2026

During the launch of GPT-5, OpenAI CEO Sam Altman predicted that by 2028, we would see the one-person $1 billion company. We have heard similar sentiments from other industry leaders chasing AGI (artificial general intelligence). Whether this turns out to be the snake charmers’ advertisement for snake oil or the unleashing of AI-driven hyperproductivity remains to be seen.

Solo businesses worth $1 million have become quite common, particularly in the tech and e-commerce sectors, but can AI provide a 1000x force multiplier? The time has come to critically scrutinize this claim and determine the foundational steps needed to take advantage of it.

To add some context, when Meta (then Facebook) acquired Instagram in 2012 for $1 billion, Instagram had a headcount of 13 and zero revenue. But Meta saw the potential revenue stream and justified the price tag.

Now the question is: can a solo founder find such a revenue-generating idea and develop an AI-driven framework to replace the other 12 employees? Broadening the scope of this question, we can ask whether small and large businesses can reduce their workforce and still maintain the same level of productivity or, in extreme cases, improve it.

As the consistently high Fed interest rates over the past few years kept borrowing costs high for US tech companies, we have seen multiple large layoffs across FAANG (Facebook, Amazon, Apple, Netflix, and Google) and similar companies as part of cost-cutting initiatives. One of the key challenges in these layoffs was removing low-performing team members while maintaining high team productivity.

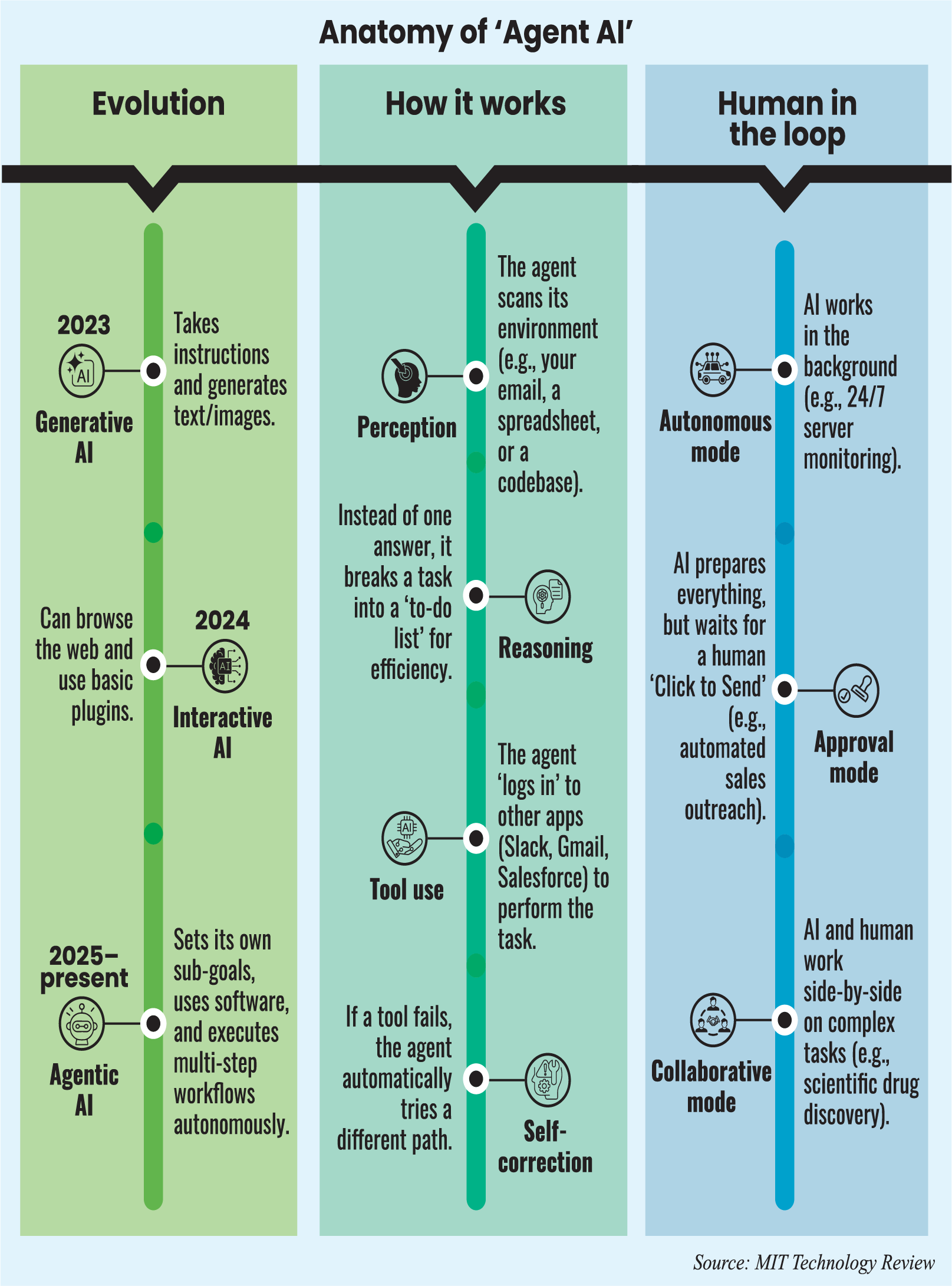

As senior leaders searched for a path forward, a new way of doing work emerged in 2024: a network of autonomous computer systems (agents, in popular parlance) that integrates with human team members, offloads most routine and low-ambiguity tasks, and keeps the wheels turning. This framework for combining human workers and autonomous systems is now known as agentic AI.

Traditional AI primarily operates on a narrow scope. It relies on multiple machine learning models and responds to user commands, including analyzing data, predicting outcomes, and performing inference.

Unlike traditional AI, which requires constant human intervention and operates within predefined constraints, agentic AI can exhibit autonomy, goal-driven behavior, and adaptability. With the advent of generative AI, we have seen AI models’ incredible ability to generate text, images, and videos.

Agentic AI, supercharged with GenAI, takes this to a higher level by applying the generative outputs to a specific goal. It often uses feedback from the process to improve the generative output and finally achieve the goal.

For example, a generative AI system can be used to generate code to fix a bug, whereas an agentic AI system can take the code, run the tests, use the feedback from the tests to improve the code, often over multiple cycles, and then finally deploy the fix without breaking any existing functionality.
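
The generate, test, and refine cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product works: `refine_until_passing`, `toy_generate`, and `toy_run_tests` are hypothetical names, and the toy generator merely stands in for a real generative model.

```python
from typing import Callable, Optional


def refine_until_passing(
    generate: Callable[[Optional[str]], str],
    run_tests: Callable[[str], Optional[str]],
    max_cycles: int = 5,
) -> Optional[str]:
    """Return the first candidate that passes the tests, or None if none does."""
    feedback = None
    for _ in range(max_cycles):
        candidate = generate(feedback)   # generative step
        feedback = run_tests(candidate)  # None means every test passed
        if feedback is None:
            return candidate             # safe to hand over for deployment
    return None                          # give up and escalate to a human


def toy_generate(feedback: Optional[str]) -> str:
    # Hypothetical stand-in for a model: the first attempt is buggy, and
    # the attempt made after seeing any test feedback is correct.
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def toy_run_tests(source: str) -> Optional[str]:
    # Load the candidate code and check a single expected result.
    namespace: dict = {}
    exec(source, namespace)
    result = namespace["add"](2, 3)
    if result != 5:
        return f"add(2, 3) returned {result}, expected 5"
    return None


fixed = refine_until_passing(toy_generate, toy_run_tests)
```

The cap on cycles is a deliberate design choice in this sketch: when the loop cannot converge, it returns nothing rather than shipping an untested fix.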

There are several key components that enable the agentic AI system to observe, reason, and act. It starts with gathering information from its surroundings, which may involve analyzing texts, images, and quantitative data. Because of the need to ingest information from diverse sources, most agentic AI systems are multimodal.

After perception, it employs large language models (LLMs) to understand the context from the gathered data, identify key information, and formulate potential solutions.

The next step involves breaking down large prompts into smaller steps and developing a plan to solve these small problems. Based on the plan, it then acts, which may involve performing some tasks, interacting with other systems, or making some decisions.

After the action, it can review the results and use the feedback to repeat the cycle of perceive, reason, plan, act, and reflect until the goal is achieved. In a sense, this is exactly how a high-performing individual operates; the only difference now is the availability of an army of agents who can operate 24/7 with the same level of operational efficiency.
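
As a rough illustration of that cycle, the sketch below wires the five stages into a single loop. Everything in it is an assumption made for demonstration: `ToyAgent` is a hypothetical class, its "environment" is a single number, and a real agent would call an LLM and external systems where this one does simple arithmetic.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ToyAgent:
    goal: int          # target value the agent must reach
    state: int = 0     # the "environment" the agent observes and changes
    log: List[tuple] = field(default_factory=list)

    def perceive(self) -> int:
        return self.state

    def reason(self, observation: int) -> int:
        # How far are we from the goal?
        return self.goal - observation

    def plan(self, gap: int) -> List[int]:
        # Break the gap into one bounded step of at most 3 units.
        return [max(-3, min(3, gap))]

    def act(self, steps: List[int]) -> None:
        for step in steps:
            self.state += step

    def reflect(self) -> bool:
        return self.state == self.goal

    def run(self, max_cycles: int = 20) -> Optional[int]:
        """Return the number of cycles needed, or None if the goal was not met."""
        for cycle in range(1, max_cycles + 1):
            observation = self.perceive()
            gap = self.reason(observation)
            steps = self.plan(gap)
            self.act(steps)
            self.log.append((cycle, observation, steps, self.state))
            if self.reflect():
                return cycle
        return None


cycles_needed = ToyAgent(goal=10).run()  # takes steps of 3, 3, 3, then 1
```

Each pass through `run` is one turn of the cycle; the log keeps a record a human reviewer could audit afterwards.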

The nay-sayers will point out that there are so many subtle things a high-performing individual does on the job that it will be virtually impossible to replicate them with an agentic AI system.

Let us look at an example case to examine that claim. Let us think of a nationwide retail chain of consumer goods and groceries that is trying to integrate agentic AI into its day-to-day operations in the supply chain and logistics division to improve efficiency. Some key responsibilities of this division will be demand forecasting and replenishment, supplier management and procurement, logistics and transportation arrangement, and warehouse operations.

The division can create an AI agent (the building block of agentic AI) with a specific mandate of demand forecasting and replenishment. The agent can continuously ingest POS sales, promotions, holidays, weather, regional events, and social trends, and detect demand shifts before weekly forecast cycles. It can also flag SKUs/stores with abnormal demand patterns.
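
One simple way such flagging could work is a z-score check of the latest sales figure against each SKU’s own history. The sketch below assumes that approach purely for illustration; the function name, the sample data, and the threshold of 3 standard deviations are all hypothetical, and a production system would likely use a richer forecasting model.

```python
from statistics import mean, stdev
from typing import Dict, List


def flag_abnormal_demand(
    history: Dict[str, List[float]],
    latest: Dict[str, float],
    z_threshold: float = 3.0,
) -> List[str]:
    """Return SKUs whose latest sales deviate strongly from their history."""
    flagged = []
    for sku, past in history.items():
        if sku not in latest or len(past) < 2:
            continue  # not enough data to judge this SKU
        mu = mean(past)
        sigma = stdev(past)
        if sigma == 0:
            # Perfectly flat history: any change at all is abnormal.
            if latest[sku] != mu:
                flagged.append(sku)
            continue
        z_score = (latest[sku] - mu) / sigma
        if abs(z_score) >= z_threshold:
            flagged.append(sku)
    return flagged


# Made-up daily unit sales for two SKUs at one store.
history = {
    "milk": [100, 102, 98, 101, 99],
    "umbrella": [10, 12, 9, 11, 10],
}
latest = {"milk": 101, "umbrella": 45}  # e.g. a sudden rainy spell

abnormal = flag_abnormal_demand(history, latest)
```

With these sample numbers, milk stays within its usual range while the jump in umbrella sales is flagged for attention.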

In a traditional workplace, users might get a dashboard with all these stats, and then there need to be meetings among associates to discuss these observations and determine appropriate actions. However, in agentic AI-integrated workplaces, APIs will connect the ERP and store SKU management systems so that these observations can be turned into actions with minimal human supervision.

The system can adjust short-term forecasts easily and notify planners only when confidence thresholds are crossed. It can monitor store-level stock and lead times and automatically generate purchase orders or inter-store transfers within approved limits, leading to lower stock-outs and reduced over-ordering.
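
A hedged sketch of that decision rule follows. It assumes that "crossing a confidence threshold" means the forecast confidence falling below a minimum, and that "approved limits" means a cap on units the agent may order on its own; the names `StockStatus` and `decide_replenishment` and all default values are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class StockStatus:
    sku: str
    on_hand: int                # units currently in the store
    daily_forecast: float       # forecast units sold per day
    lead_time_days: int         # days until a new order arrives
    forecast_confidence: float  # 0.0 (no confidence) to 1.0 (certain)


def decide_replenishment(
    status: StockStatus,
    min_confidence: float = 0.8,  # assumed threshold for acting alone
    max_auto_units: int = 500,    # assumed approved order limit
    safety_days: int = 2,         # assumed buffer beyond the lead time
) -> Tuple[str, int]:
    """Return (action, units), where action is one of
    'no_action', 'auto_order', or 'notify_planner'."""
    cover_days = status.lead_time_days + safety_days
    needed = math.ceil(status.daily_forecast * cover_days) - status.on_hand
    if needed <= 0:
        return ("no_action", 0)
    if status.forecast_confidence < min_confidence or needed > max_auto_units:
        # Outside the agent's mandate: hand the decision to a human planner.
        return ("notify_planner", needed)
    return ("auto_order", needed)


routine = decide_replenishment(StockStatus("A-100", 100, 40.0, 3, 0.9))
uncertain = decide_replenishment(StockStatus("A-100", 100, 40.0, 3, 0.5))
```

In this example the routine case is ordered automatically, while the same shortfall with a low-confidence forecast is routed to a planner instead.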

With an empowered, agentic AI, many man-hours can be saved that would otherwise be spent creating spreadsheets and charts. The human team members can focus more on strategy and exceptions, rather than on laborious data curation.

If this sort of enterprise-wide transformation is needed, we must change our traditional technology view. Until now, we have thought of tools automating tasks, people making decisions, and strategy determining how people and technology work together.

However, the dual nature of agentic AI creates strategic tensions across several dimensions when adopted. One of them is along the flexibility axis.

While human workers are adaptable and traditional AI operates on a narrow margin, an agentic AI system sits in between. The challenge is to design business processes with intermediate flexibility so they can scale effortlessly without sacrificing the ability to handle edge cases and system failures.

Another tension is between experience and expediency. While traditional AI tools are easy to adopt, to reap the full benefit of an agentic AI system, we need to allow the system time to learn and adapt. This tug-of-war between the need for something that works now and the long view of AI investment needs to be resolved when setting up an organization’s AI strategy.

Another key issue is the level of autonomy. Current AI tools are fully owned and controlled by human workers. What sort of autonomy and supervision an agentic AI system will be allowed to operate with is up for debate.

Too tight control will curb agents’ productivity and create bottlenecks in the process, while too much freedom can cause catastrophic failures. Organizations must assess their processes and establish an AI governance framework to scale agentic workflows safely while preventing runaway systems.

Another key trade-off is choosing between retrofitting legacy systems and reengineering them. While retrofitting requires less capital and offers a faster return, reengineering offers more AI-native business processes that can survive the rapid changes in the AI technology landscape.

It is safe to say that future successful organizations will have agentic AI embedded in their DNA. For any organization to get there, it must go through gradual steps. The workforce must become comfortable with the new way of working.

The organization needs to ask: if it were to start from scratch in today’s technological climate, what would the work look like? These reimagined workflows should be the goal; some can be reached through an iterative approach, while others can outright replace the existing ones.

This is where upskilling for leaders and workers comes into play. AI-literate leadership can quickly assess the pros and cons of various options and choose the ones that align with organizational goals. An AI-aware human workforce can recognize the technology’s true value, embrace reimagined workflows, and participate in the disruption in a symbiotic way with AI agents, rather than resisting and reverting to old ways of working.

Leadership buy-in is a must to secure long-term commitment to investing in AI, and workforce buy-in is necessary to prepare agents using human expertise for the reimagined processes and to unleash productivity.

If we set aside clickbait like “Is AI coming for your job?” and critically assess the claims and capabilities of current and near-future agentic AI frameworks, we find that this dual-natured technology comes with its own unique challenges.

For leaders, it is not a technological challenge but an organizational one: redesign business processes with enough flexibility to allow agentic systems to have autonomy while establishing human guardrails to prevent catastrophe. Too little autonomy will limit the system’s capability, and too much of it without ethical governance can result in unintended systemic failures.

Some organizations treat it as a way to reduce costs, while others aim to increase overall productivity; leadership must provide clarity on its AI vision and design processes accordingly.

Human workers, rather than viewing these artificial coworkers as competitors, should see them as members of the same orchestra. AI literacy and upskilling are necessary to raise the baseline performance of human workers and reduce the strategic tensions emerging as AI adoption proceeds.

Ultimately, a new way of working will prevail, where AI-human teams will usher in a new age of productivity. Refusing to participate in it out of fear of the unknown can pose risks to any organization’s survival.

Sameeul Bashir Samee is a computational scientist currently working at the National Institutes of Health in the United States.

sameeul@gmail.com